INTRODUCTION

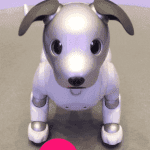

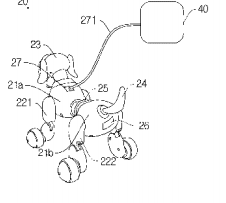

Recent advances in computation, robotics, machine learning, communication, and miniaturization are turning futuristic visions of compassionate intelligent devices into reality. Of these, artificial intelligence (AI) is the most far-reaching, with the potential to fundamentally alter our lives. The combination of AI and robotics is producing a range of intelligent devices that could help meet humankind's growing demands.