Introduction

Augmented reality (AR) is one of the recent innovations making inroads into several markets, including healthcare. AR takes digital or computer-generated information, such as audio, video, images and touch (haptic) sensations, and overlays it on the real-world environment in real time. AR offers feasible solutions to many challenges within the healthcare system and can be applied in areas such as medical training, surgical assistance and rehabilitation. AR innovations can help medical personnel diagnose, treat and operate on their patients more accurately.

Some of the pioneering AR solutions that aim to address these challenges in healthcare are discussed below.

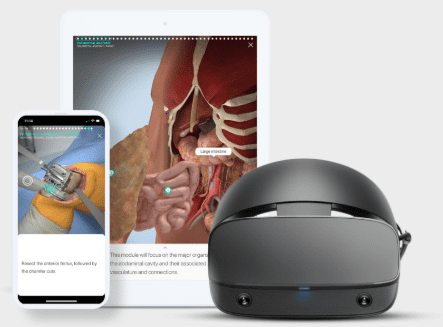

Medical Education / Training

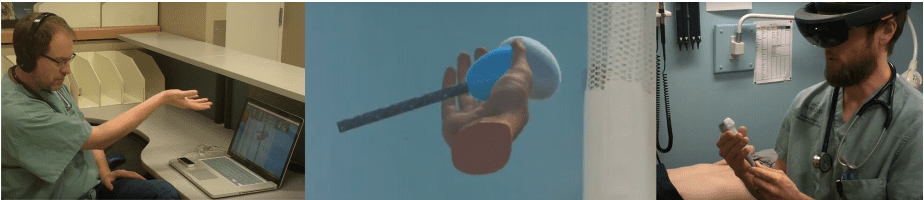

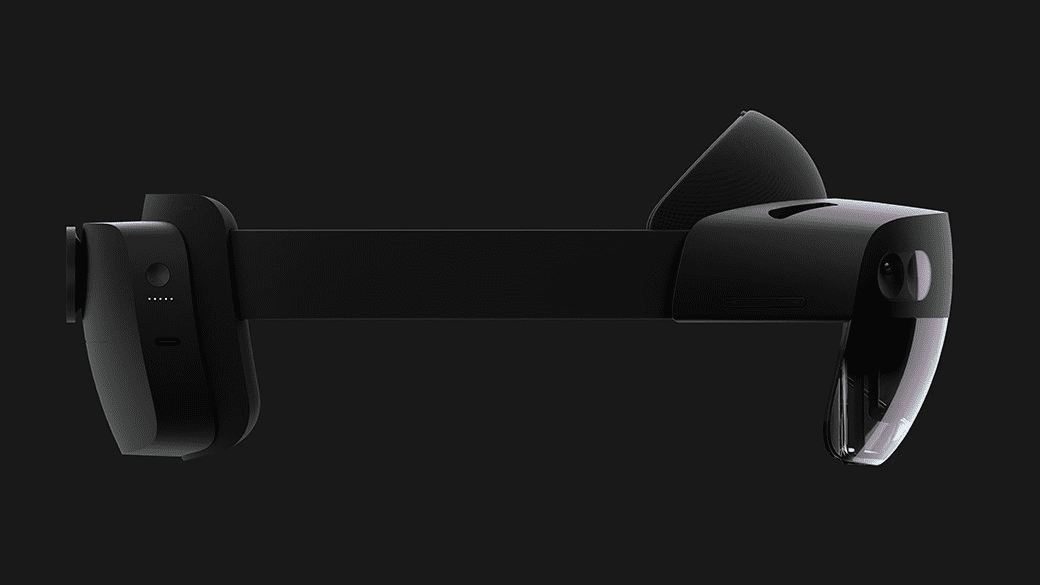

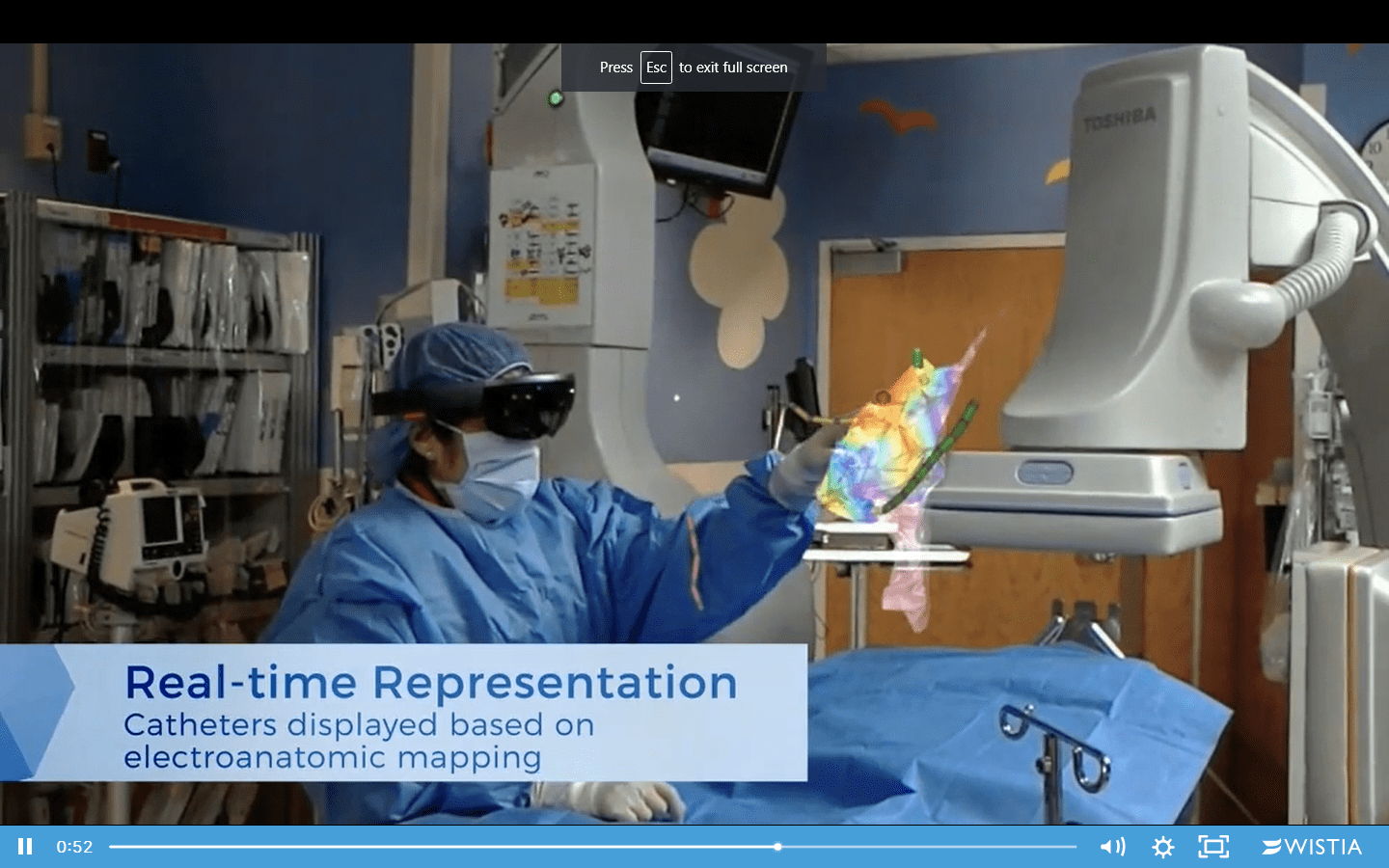

AR has a deep impact on medical training, with applications that range from 3D visualization to bringing anatomical learning to life. AR applications project extensive information, 3D visual structures and links onto the traditional pages of medical textbooks, for example for anatomy training. Recent hardware platforms, such as Microsoft's HoloLens glasses, have started to support medical education applications. Using hand gestures, users can deform 3D models, view them in fly-by mode and otherwise interact with them to reveal hidden organs [1, 2].

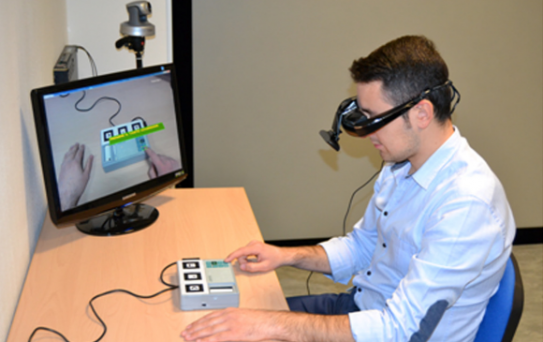

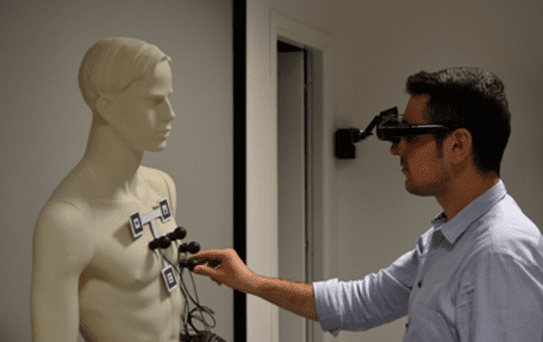

Vimedix™, from CAE Healthcare, is used to train students in echocardiography. It consists of a mannequin and a transducer for transthoracic or transesophageal echocardiography. It enables healthcare professionals to see how an ultrasound beam cuts through human anatomy in real time. CAE Healthcare has begun to integrate HoloLens into its technology so that users can view the images through the glasses, unrestricted by the dimensions of a screen [3].

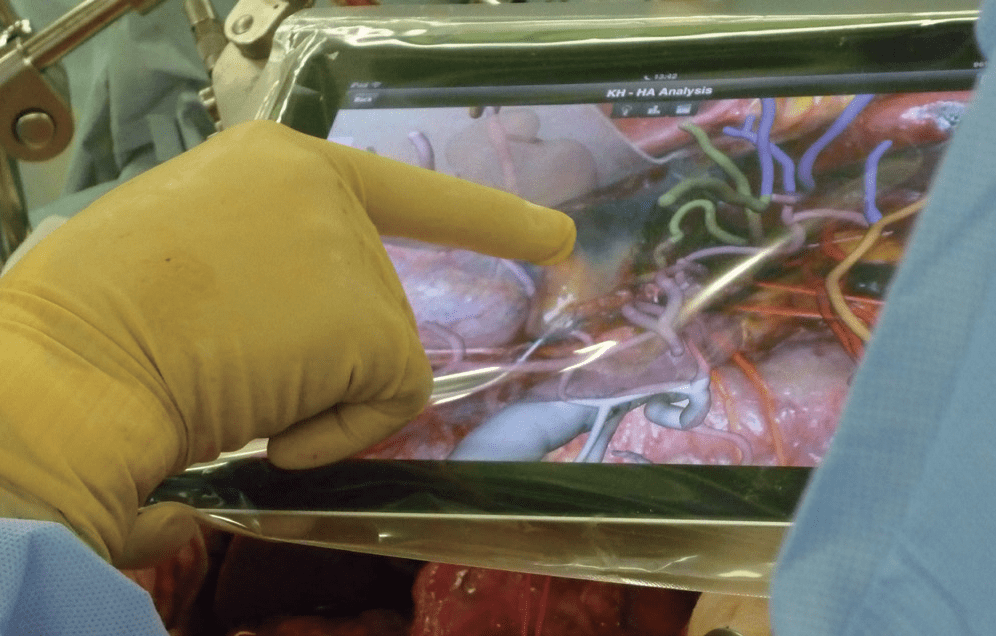

The main application of AR in surgical training is telementoring, in which the supervisor teaches the trainee by demonstrating the proper surgical moves, paths and handling on the AR screen. This information is displayed to the trainees to guide them. As a learning tool, AR provides the key benefit of creating a highly engaging and immersive educational experience by combining different sensory inputs [4].

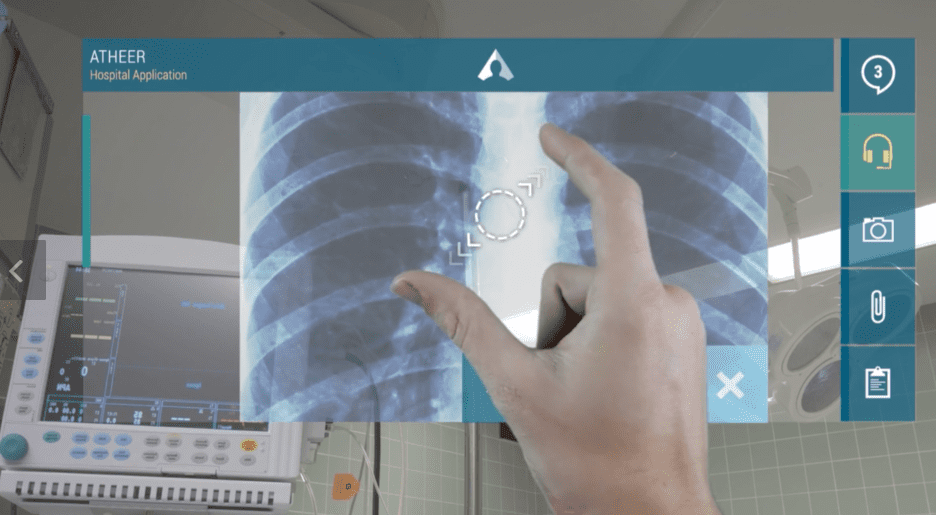

Surgery

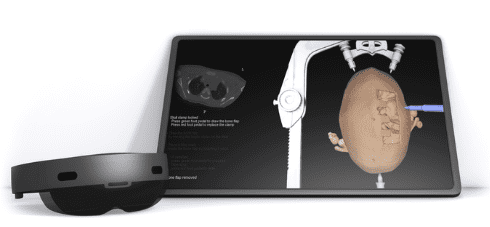

AR finds most of its applications in surgery, in areas such as nephrectomy, neurosurgery, orthopaedics and, in particular, laparoscopic surgery. Reliability and realism are extremely important in these fields, not only for the comfort of the user but also to ensure that trainees do not learn inappropriate handling and that actual trauma conditions are recreated. Compared with training on actual patients, AR offers advantages such as minimal cost per use, the absence of ethical issues, and safety [5].

Oral and maxillofacial surgery (OMS) is a delicate procedure performed in a confined space, and it requires highly accurate image registration and low system processing time. Current systems have difficulty with image registration when matching two images taken in different postures. Researchers from Charles Sturt University, Australia, developed a fast AR system with improved visualization for OMS. The proposed system improved both overlay accuracy and processing time [6].
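To make the idea of image registration and overlay accuracy concrete, the sketch below fits a rigid transform (rotation plus translation) between two sets of paired 2D landmarks and reports the residual overlay error. It is only an illustration under simplifying assumptions: it is not the method used by the system in [6], real AR systems typically register 3D data and must cope with noise and posture changes, and the function names `register_rigid_2d`, `apply_rigid_2d` and `rms_error` are hypothetical.

```python
import math


def register_rigid_2d(source, target):
    """Least-squares rigid fit (rotation + translation) mapping source onto target.

    Both arguments are lists of (x, y) landmarks, paired by index.
    Returns the rotation angle in radians and the (dx, dy) translation.
    """
    n = len(source)
    if n != len(target) or n < 2:
        raise ValueError("need at least two paired landmarks")

    # Centroids of both landmark sets.
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(p[0] for p in target) / n
    ty = sum(p[1] for p in target) / n

    # Accumulate dot and cross products of the centred landmark pairs.
    dot = 0.0
    cross = 0.0
    for (ax, ay), (bx, by) in zip(source, target):
        ax, ay = ax - sx, ay - sy
        bx, by = bx - tx, by - ty
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    # Closed-form optimal rotation angle in 2D.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)

    # Translation that maps the rotated source centroid onto the target centroid.
    dx = tx - (c * sx - s * sy)
    dy = ty - (s * sx + c * sy)
    return theta, (dx, dy)


def apply_rigid_2d(points, theta, shift):
    """Rotate points by theta about the origin, then translate by shift."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + shift[0], s * x + c * y + shift[1]) for x, y in points]


def rms_error(a, b):
    """Root-mean-square distance between paired points (a simple overlay error)."""
    total = sum((ax - bx) ** 2 + (ay - by) ** 2 for (ax, ay), (bx, by) in zip(a, b))
    return math.sqrt(total / len(a))
```

In use, one would call `register_rigid_2d` on landmarks picked in the virtual model and in the camera image, map the model with `apply_rigid_2d`, and check `rms_error` against the accuracy the procedure requires. A large residual suggests that a rigid model is insufficient, for example when the patient's posture differs between the two images.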

Surgical navigation is essential for performing complex operations accurately and safely. The traditional navigation interface does not display the full spatial information of the lesion area because it is intended only for two-dimensional observation. Researchers from Soochow University, China, have applied AR technology to spinal surgery to give surgeons more intuitive information. They proposed a virtual-real registration technique, based on an improved identification method and a robot-assisted method, to improve registration accuracy. The effectiveness of the puncture performed by the robot was verified with X-ray images, and both optimized methods were found to be highly effective. The proposed AR navigation system has high accuracy and stability, and is expected to become a valuable tool in future spinal surgery [7].